GPQA benchmark leaderboard: testing LLMs on graduate-level science questions

- BRACAI

- Jul 17, 2024

Ever wondered which AI model is best at hard science?

The GPQA diamond benchmark is one of the strongest tools we have to measure this.

It tests how well AI models handle graduate-level questions in biology, physics, and chemistry, and it is designed to be difficult even for PhD-level experts.

Why should you care?

GPQA is not a trivia quiz. It is a brutally hard science test.

For LLMs, it is one of the best proxies we have for:

- domain knowledge you can trust

- step-by-step scientific reasoning

- fewer confident wrong answers

So if your work touches research, engineering, healthcare, climate, or technical content, GPQA scores are a useful signal when choosing an AI model.

Not sure which AI model to pick?

Read our full guide to the best LLMs

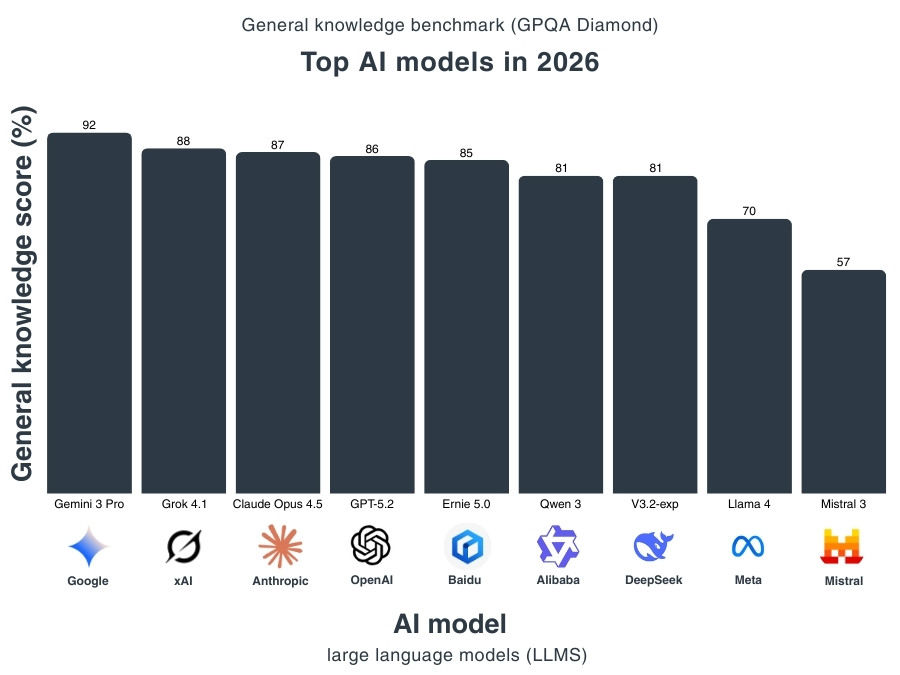

Best LLM on the GPQA diamond benchmark (leaderboard)

The GPQA diamond benchmark shows something interesting: even on its harder questions, frontier models still score very high.

The top tier stays strong

- Gemini 3 Pro leads with 92%

- Grok 4 follows at 88%

- Claude Opus scores 87%

- OpenAI’s ChatGPT model comes in at 86%

At this level, the question is no longer “can it answer science questions?”

It is “how reliably can it handle the hardest expert-level ones?”

The gap grows fast below the frontier

Europe’s Mistral scores 57%, far behind the leaders.

That gap matters if you need consistent performance on complex technical work.
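To make the gap concrete, here is a minimal Python sketch that computes how far each model sits behind the leader. The scores are copied from the leaderboard above; the dictionary labels and the `gaps_to_leader` helper are illustrative names, not part of any official tooling.

```python
# Scores (percent) copied from the leaderboard above; labels are shortened.
scores = {
    "Gemini 3 Pro": 92,
    "Grok 4": 88,
    "Claude Opus": 87,
    "ChatGPT": 86,
    "Mistral": 57,
}


def gaps_to_leader(scores):
    """Return each model's distance from the top score, in percentage points."""
    top = max(scores.values())
    return {model: top - score for model, score in scores.items()}


gaps = gaps_to_leader(scores)

# Print models from smallest to largest gap.
for model, gap in sorted(gaps.items(), key=lambda item: item[1]):
    print(f"{model}: {gap} points behind the leader")
```

On these numbers, the other frontier models are within 6 points of the leader, while Mistral is 35 points behind.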

What is the GPQA benchmark?

GPQA stands for "graduate-level Google-proof Q&A".

It was introduced by Rein et al. (2023) to evaluate how well LLMs handle questions that require real scientific expertise.

The test includes 448 questions across:

- Biology

- Physics

- Chemistry

It is extremely difficult for humans:

- PhD-level experts average around 65%

- Skilled non-experts, even with full web access, reach only 34%
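These human scores are easier to read against a guessing baseline. GPQA questions are multiple choice with four options, so random guessing lands at about 25%. The sketch below shows how accuracy is typically computed for a test like this and how far each human group sits above chance. The answer data is invented toy data, and `accuracy` is an illustrative helper, not the benchmark's official scoring code.

```python
def accuracy(predictions, answers):
    """Fraction of questions where the predicted option matches the answer key."""
    if len(predictions) != len(answers):
        raise ValueError("predictions and answers must have the same length")
    correct = sum(p == a for p, a in zip(predictions, answers))
    return correct / len(answers)


# Four answer options per question, so guessing scores about 25%.
CHANCE_PCT = 100 / 4

# Toy example (invented data, not real GPQA questions).
answers = ["A", "C", "B", "D", "A", "B", "C", "D"]
predictions = ["A", "C", "D", "D", "B", "B", "C", "A"]
toy_score = accuracy(predictions, answers)  # 5 of 8 correct

# Human results quoted above, in percent.
human_scores = {"PhD-level experts": 65, "Skilled non-experts": 34}
margin_over_chance = {
    group: pct - CHANCE_PCT for group, pct in human_scores.items()
}

for group, margin in margin_over_chance.items():
    print(f"{group}: {margin:.0f} points above random guessing")
```

Seen this way, skilled non-experts with web access finish only 9 points above guessing, while experts finish 40 points above it.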

That is why GPQA has become a key benchmark for testing scientific reasoning.

What is the GPQA diamond benchmark?

GPQA diamond is the hardest subset of the GPQA benchmark, not a separate test.

It keeps only the 198 most challenging questions, selected to separate true experts from everyone else.

The contrast is striking: a test where top human experts still struggle is now close to solvable by the best AI systems.

That is why GPQA diamond matters. It is one of the few benchmarks left that can still distinguish the very best models from the rest.
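One caveat when reading small differences: with only 198 questions, any single score carries some sampling noise. As a rough sketch, and assuming each question behaves like an independent pass/fail trial (a simplification, not how the benchmark authors report results), the binomial standard error gives a feel for that noise. The `standard_error` helper is an illustrative name.

```python
import math

N_DIAMOND = 198  # number of questions in GPQA diamond


def standard_error(accuracy, n_questions):
    """Binomial standard error of an accuracy measured on n_questions items."""
    return math.sqrt(accuracy * (1 - accuracy) / n_questions)


# Leaderboard scores quoted earlier in the article, in percent.
for model, pct in [("Gemini 3 Pro", 92), ("Grok 4", 88), ("Mistral", 57)]:
    se_points = standard_error(pct / 100, N_DIAMOND) * 100
    print(f"{model}: {pct}% with a standard error of about {se_points:.1f} points")
```

Under this assumption, a score near 90% has a standard error of roughly 2 points. One-point differences between neighboring frontier models are therefore best read as near ties, while the distance to Mistral is far larger than the noise.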

Ready to apply AI to your work?

Benchmarks are useful, but real business impact is about execution.

We run hands-on AI workshops and build tailored AI solutions, fast.
